University of Colorado

Aurora, CO

Program Director: Andrew Luks, MD

Program Associate Director: Ricky Mohon, MD

Program Type: Pediatric Pulmonary

Abstract Authors: Erin Khan, MD; Ricky Mohon, MD

Description of Fellowship Program: The Pediatric Pulmonary Fellowship at the University of Colorado has a long and successful history of training pediatric pulmonologists who now practice throughout the country. Our program is committed to providing each trainee with exceptional educational experiences that will help him or her acquire the knowledge, skills, and technical expertise to become a superb pediatric pulmonologist. Many of our graduates have gone on to distinguished careers as recognized leaders in pediatric pulmonology locally, nationally, and internationally.

Abstract

Background

The ACGME Next Accreditation System was implemented in all ACGME-accredited programs in 2013 and introduced milestone-based evaluation of learners across six core competencies; in pediatrics these are the Pediatric Milestones (PM). The PM are an important and comprehensive way to track a learner’s progress. A subgroup developed PM for the pediatric subspecialties in 2015, for a total of 21 subspecialty PM. Previously at our institution, after any clinical contact with a fellow, each faculty member rated the fellow in all 21 PM categories on a Likert scale and added narrative comments. As a result, faculty had to complete lengthy, time-consuming evaluations frequently. The Clinical Competency Committee (CCC) reviews these evaluations and assigns each fellow a summary numerical score for every PM. Faculty, fellows, and the CCC all felt that the evaluations provided little meaningful feedback.

Methods

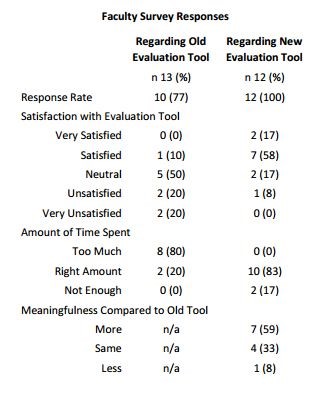

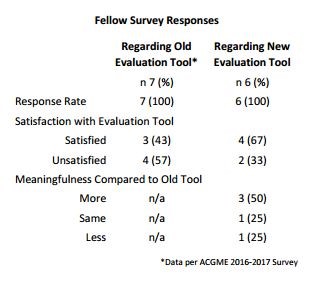

A new, succinct evaluation tool consisting of only four questions was created and implemented in academic year (AY) 2017-2018. The shortened tool allows faculty members to provide qualitative comments on the PM categories they observed while working with the fellow. The CCC then reviews these evaluations and assigns a numerical score for each of the fellow’s 21 subspecialty PM. Separate survey instruments were created to assess faculty satisfaction with, and time spent on, the old and new evaluation tools. To assess fellows’ satisfaction with faculty feedback after assignments, we compared data from the AY 2016-2017 ACGME survey with a similar follow-up survey. Fellows and faculty were asked to rate the meaningfulness of feedback provided via the new evaluation tool compared with the old tool. Free-text comments were permitted, and all responses were anonymous.

Results

The faculty surveys had excellent response rates: 77% (10/13) for the survey on the old evaluation tool and 100% (12/12) for the survey on the new tool. Overall, faculty were dissatisfied with the old tool; only 10% reported being “satisfied,” and 80% reported that the old evaluations took “too much time” to complete. Only 10% of faculty reported reading the lengthy prompts that accompanied each of the 21 Likert-scale ratings. In the follow-up survey, 75% of faculty reported being “satisfied” or “very satisfied” with the new evaluation tool, and 83% reported that it took “the right amount of time” to complete. When asked to compare the meaningfulness of feedback, 59% felt they were able to provide more meaningful feedback with the new tool, and 33% felt it was equally meaningful.

All of our fellows (7/7) responded to the 2016-2017 ACGME annual survey. Only 43% of them reported being satisfied with feedback after assignments, compared with a national average of 72%. All fellows (6/6) also responded to the follow-up survey we administered in AY 2017-2018. Satisfaction improved, with 67% of our fellows reporting being “satisfied” with the new feedback form. Fellows who reported being “unsatisfied” cited a lack of in-person feedback and a lack of comprehensive feedback comments as sources of discontent. Of the fellows who had experienced both evaluation tools, 75% reported that feedback via the new tool was as meaningful as or more meaningful than feedback via the old tool.

Conclusions and Future Directions

Feedback is crucial to a fellow’s development of academic and clinical skills. Meaningful feedback for learners should be direct, timely, and comprehensive. In our program, replacing lengthy Likert-scale forms with short qualitative evaluations completed by faculty improved faculty satisfaction and was associated with more meaningful feedback for fellows. We will further assess the new evaluation tool after each semiannual CCC meeting to monitor how the change affects the committee’s workflow in tracking each fellow’s progress.